The Evolution of Compute: Transitioning from Cloud to DePIN Networks

The Journey from Cloud to DePIN

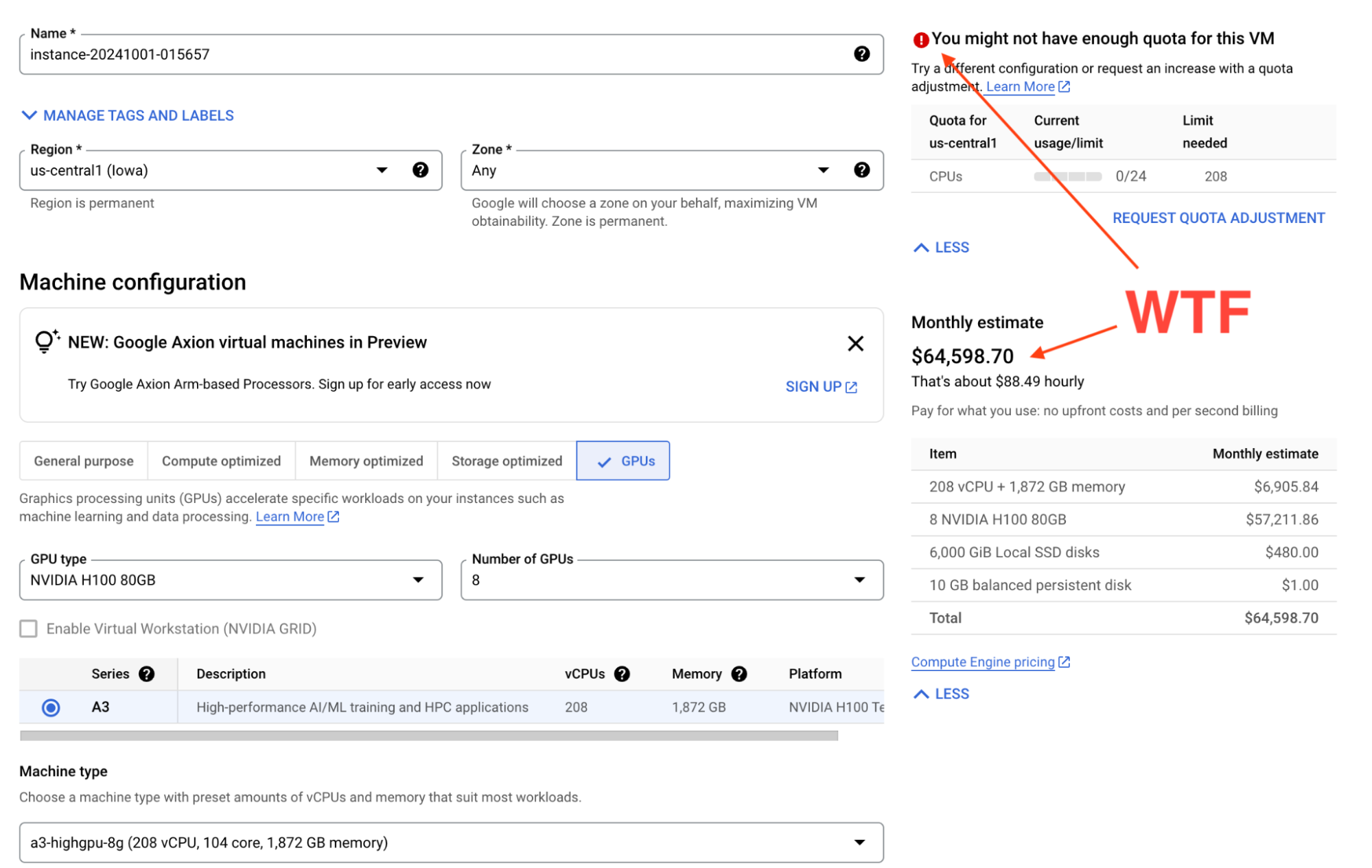

To understand the value proposition of DePIN (Decentralized Physical Infrastructure Networks), it helps to start with the evolution of cloud computing. When cloud services emerged, they promised scalability, agility, and—most importantly—cost efficiency. In practice, the experience doesn't always match up, especially in the world of GPUs. For instance, using Google Cloud to benchmark GPU instances can be frustratingly restrictive, with limits on regional availability, GPU quotas, and credits that conveniently don't apply to the services you actually need. These constraints often get in the way of experimenting or scaling efficiently.

The Realities of Cloud Promises

The cloud came with several promises that revolutionized how we think about computing:

| Cloud Promise | Description | Reality |
|---|---|---|
| Scalability & Agility | Resources can be rapidly scaled to meet changing demands. | Partly True. Vendor lock-in is real |
| Cost Efficiency | Pay-as-you-go model, eliminating upfront hardware investments. | False. Cloud can be extremely expensive |
| Innovation & Flexibility | Access to cutting-edge tech for fast experimentation. | False. No access to entry-level GPU |
| Reliability & Security | Global data centers ensure data availability and security. | True. CSPs take durability, availability, and security very seriously |
| Global Reach | Facilitates global collaboration through worldwide infrastructure. | True. Easy access to various regions |

On-Demand Compute Marketplaces

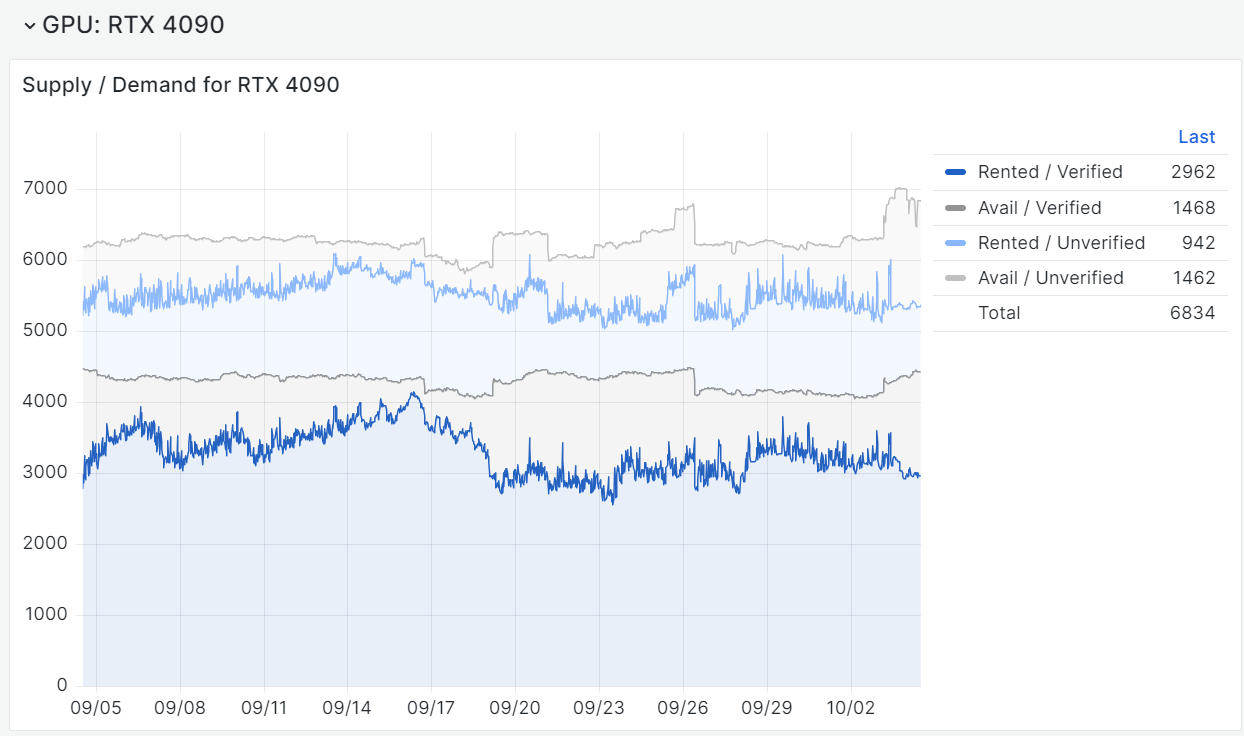

In recent years, with the growing demand for GPU compute due to AI and ML workloads, on-demand GPU platforms have emerged. These platforms offer an alternative to traditional cloud by renting out "pods" or GPU instances on an hourly basis. They provide a superior user experience: spin-up times are faster, there are no quota approvals, and everything is ready in seconds using containerized environments like Docker. More significantly, these platforms use a diverse set of GPUs, including consumer models like the RTX 4090.

These on-demand platforms host infrastructure in smaller data centers, colocation facilities, or even individual homes (sometimes called "community cloud" setups). This keeps operational costs low and allows the use of consumer-grade GPUs that traditional cloud providers avoid. Consumer GPUs offer compelling performance at a fraction of the cost of enterprise GPUs, and they are what drives the cost efficiency of on-demand platforms.

I wrote a blog post for Runpod on benchmarking these cards for inference, and Runpod has since run some benchmarks on vLLM.

| GPU | H100 NVL PCIe | RTX 4090 |
|---|---|---|
| Price | $26,400 | $1,600 |
| FP32 TFLOPS | 60 TFLOPS | 82.58 TFLOPS |
| Power | 350-400W | 450W |
| Memory Size / Type | 94GB HBM3 | 24GB GDDR6X |
| Memory Bandwidth | 3.94 TB/s | 1.01 TB/s |
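
To make the price gap concrete, here is a rough sketch that turns the table into dollars per unit of capability. The figures are copied from the table above; the dictionary layout and the `dollars_per` helper are my own naming for illustration, and list price per peak spec is only a crude proxy for real inference cost.

```python
# Figures copied from the comparison table above (list price and peak specs).
gpus = {
    "H100 NVL PCIe": {
        "price_usd": 26_400,
        "fp32_tflops": 60.0,
        "memory_gb": 94,
        "bandwidth_tbs": 3.94,
    },
    "RTX 4090": {
        "price_usd": 1_600,
        "fp32_tflops": 82.58,
        "memory_gb": 24,
        "bandwidth_tbs": 1.01,
    },
}


def dollars_per(spec: dict, key: str) -> float:
    """Purchase price divided by one capability figure (hypothetical helper)."""
    return spec["price_usd"] / spec[key]


for name, spec in gpus.items():
    print(
        f"{name}: "
        f"${dollars_per(spec, 'fp32_tflops'):,.2f} per FP32 TFLOPS, "
        f"${dollars_per(spec, 'memory_gb'):,.2f} per GB of memory, "
        f"${dollars_per(spec, 'bandwidth_tbs'):,.2f} per TB/s of bandwidth"
    )
```

On every one of these ratios the RTX 4090 comes out cheaper, with the widest gap on FP32 throughput. The table also shows what the ratios hide: the H100 NVL has roughly four times the memory and memory bandwidth per card, which matters for models that do not fit in 24GB.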

The RTX 4090 punches way above its weight class in AI workloads like inferencing. Isn’t it against the NVIDIA terms of service to use a consumer GPU in the data center? Well, that is tricky. The license covers the GeForce software and drivers; on Linux, you can use the NVIDIA open-source drivers instead. NVIDIA also makes it physically challenging to use a consumer GPU like an RTX 4090 in a server: the card does not fit the standard PCIe form factor, and its power connector sits on the top of the card rather than at the back, as on data center and workstation cards.

That being said, that hasn’t stopped us or tens of thousands of others from using these gaming monsters for AI workloads on on-demand platforms like Runpod, vast.ai, and TensorDock. When people talk about the CUDA “moat”, it really is the ability to compile code and run it against the millions of consumer cards out in the wild.

Enter DePIN: Decentralizing the Edge

DePIN is the next step in this evolution—an approach that combines decentralized principles with the potential of GPU computing at the edge. Unlike on-demand compute marketplaces, DePIN brings tokenomics and a decentralized model into the picture. This reduces costs even further by eliminating intermediaries, decentralizing the physical infrastructure, and incentivizing participation through crypto-economic rewards.

Tenets of DePIN as told by current projects

- Decentralization: Distributes control and decision-making away from a central authority to a network of participants.

- Incentivization: Utilizes tokenomics or other incentive mechanisms to reward participants for contributing resources or maintaining the network.

- Tokenization: Represents ownership, access rights, or other utilities through digital tokens on a blockchain.

- Interoperability: Ensures that different network components can work together seamlessly, often through standardized protocols.

- Security and Privacy: Implements robust security measures to protect data and infrastructure while ensuring user privacy.

- Scalability: Designs the network to handle increasing amounts of work or to be easily expanded.

- Transparency: Maintains open and verifiable records of transactions and operations on the blockchain.

- Resilience: Ensures the network can withstand and recover from disruptions or attacks.

- Community Governance: Empowers network participants to have a say in decision-making processes through decentralized governance models.

- Sustainability: Focuses on long-term viability by considering environmental, economic, and social factors.

However, building DePIN networks that deliver on security, scalability, and reliability is a tall order. Cloud giants like AWS, Google Cloud, and Azure can justify their high costs with guarantees of uptime and security that decentralized networks still struggle to match. Hyperscalers also lead in using renewable energy and have large sustainability commitments.

Trade-offs and Opportunities

While DePIN promises lower costs by cutting out centralized control and the associated "platform rake," it comes at the cost of reduced reliability and durability. This is why, for mission-critical workloads where downtime can be career-ending, traditional cloud platforms still command a high premium. Scalability is another area where DePIN is catching up but isn’t yet on par with the hyperscale infrastructure of centralized cloud providers.

On the other hand, for many inference tasks and AI experimentation, cost is the critical factor. DePIN networks can drive massive savings compared to cloud services, providing a compelling edge solution—especially for users willing to accept a slightly higher level of risk for the benefit of substantially lower costs.
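
One way to reason about that trade-off is to price the risk in. The sketch below is a toy model, not a measurement: it assumes each hour of work is lost independently with some probability and has to be rerun in full, and that the reliable alternative never loses work. The prices and interruption rates are hypothetical placeholders, and the function names are mine.

```python
def effective_hourly_cost(price_per_hour: float, interruption_rate: float) -> float:
    """Expected spend per hour of completed work.

    Assumes each hour is lost independently with probability
    `interruption_rate` and must be rerun in full, so the expected number
    of attempts per completed hour is 1 / (1 - interruption_rate).
    """
    if not 0.0 <= interruption_rate < 1.0:
        raise ValueError("interruption_rate must be in [0, 1)")
    return price_per_hour / (1.0 - interruption_rate)


def break_even_interruption_rate(cheap_price: float, reliable_price: float) -> float:
    """Interruption rate at which the cheaper option stops being cheaper,
    assuming the reliable option never loses work."""
    return 1.0 - cheap_price / reliable_price


# Hypothetical hourly prices, for illustration only.
DEPIN_PRICE = 0.50
CLOUD_PRICE = 2.00

for rate in (0.0, 0.05, 0.20):
    cost = effective_hourly_cost(DEPIN_PRICE, rate)
    print(f"interruption rate {rate:.0%}: ${cost:.3f} per useful hour")

threshold = break_even_interruption_rate(DEPIN_PRICE, CLOUD_PRICE)
print(f"break-even interruption rate: {threshold:.0%}")
```

Under these assumptions, the cheaper network stays ahead until interruptions eat the entire price gap. The model ignores the cost of downtime itself, which is exactly what dominates for mission-critical workloads.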

Summary: Why Inference Will End Up at the Edge with DePIN

Inference is increasingly moving to the edge, and DePIN is leading this shift due to its cost efficiency and decentralized model. Unlike traditional cloud solutions, which struggle to keep costs predictable and manageable, DePIN leverages distributed infrastructure to bring compute resources closer to where data is generated. As we saw, DePIN can leverage consumer GPUs, which offer great entry-level AI workload performance and some of the best cost per token. As AI workloads continue to grow, the need for low-cost, scalable inference will push more tasks to the edge, where DePIN networks can thrive by providing a decentralized, permissionless, and cost-effective alternative. For many non-critical AI tasks, the benefits of cost and proximity outweigh the limitations of decentralized reliability, making DePIN the ideal platform for edge inference.